Hackers are working with the White House to find flaws in artificial intelligence systems.

OpenAI, the maker of chatbot ChatGPT, and other providers like Google and Microsoft are letting thousands test the tech, including how it could be manipulated to cause harm or if it would share private information.

“This is why we need thousands of people,” Rumman Chowdhury, lead coordinator of the mass hacking event planned for this summer’s DEF CON 31 hacker convention in Las Vegas, told The Associated Press. “We need a lot of people with a wide range of lived experiences, subject matter expertise and backgrounds hacking at these models and trying to find problems that can then go be fixed.”

Officials first noted the idea of a mass hack in March at the South by Southwest festival, where AI leaders held a workshop that invited community college students to hack an AI model.

Sam Altman, then-president of Y Combinator, pauses during the New Work Summit in Half Moon Bay, California, on Feb. 25, 2019. (David Paul Morris / Bloomberg via Getty Images)

Austin Carson, president of responsible AI nonprofit SeedAI, helped with that workshop. He told the AP that conversations evolved into a proposal to test AI language models following the White House’s Blueprint for an AI Bill of Rights. That blueprint was released late last year.

This month, the Biden administration described new efforts to “further promote responsible American innovation in artificial intelligence and protect people’s rights and safety,” including $140 million in funding to launch seven new National AI Research Institutes and the release, for public comment, of draft policy guidance from the Office of Management and Budget on the use of AI systems by the U.S. government.

Rumman Chowdhury, co-founder of Humane Intelligence, a nonprofit developing accountable AI systems, at her home Monday, May 8, 2023, in Katy, Texas. (David J. Phillip / AP Newsroom)

The announcements also noted that leading AI developers had committed to participate in a public evaluation of AI systems – on a platform developed by Scale AI – at the AI Village at DEF CON 31.

The White House also said the evaluation by AI experts and thousands of community partners would explore how the models align with the National Institute of Standards and Technology AI Risk Management Framework.

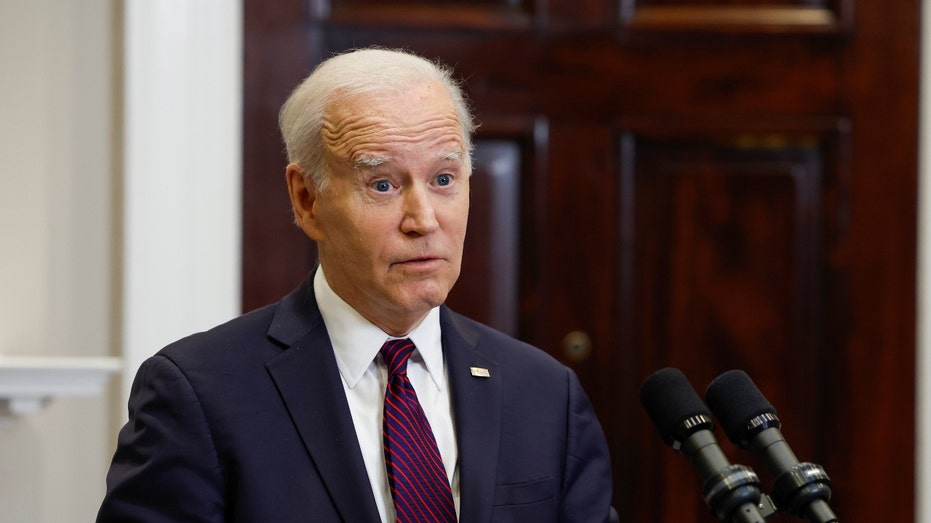

President Joe Biden delivers remarks at the White House on May 9, 2023. (Anna Moneymaker / Getty Images)

“This independent exercise will provide critical information to researchers and the public about the impacts of these models, and will enable AI companies and developers to take steps to fix issues found in those models. Testing of AI models independent of government or the companies that have developed them is an important component in their effective evaluation,” the White House said.

While some official “red teams” are already authorized by companies to attack the models, others are hobbyists.

This year’s event is the first to tackle the large language models that have drawn public attention in recent months.

“This is a direct pipeline to give feedback to companies,” Chowdhury, who was previously the head of Twitter’s AI ethics team, said. “It’s not like we’re just doing this hackathon and everybody’s going home. We’re going to be spending months after the exercise compiling a report, explaining common vulnerabilities, things that came up, patterns we saw.”

The Associated Press contributed to this report.