As far back as he can remember, Abhijith Gandrakota has felt a natural pull toward physics. “For me, it’s a fundamental thing,” he says. “It’s simple.”

As an undergraduate he was drawn to theory, but he quickly switched to experiment.

“Theory was good, but I was driven to experimental particle physics because even if I write a theory, someone has to test it anyway,” says Gandrakota, who is now a postdoc at the US Department of Energy’s Fermi National Accelerator Laboratory. “I’d rather be the person who tests and finds stuff than the person who predicts it.”

But he never lost his soft spot for theoretical physics. Today, Gandrakota and his colleagues on the CMS experiment are developing a machine-learning tool that will allow theorists even more freedom and creativity.

Instead of hunting for specific signatures of new physics in the data the CMS detector collects from collisions in the Large Hadron Collider, the new tool will search for rare anomalies. “It’s a bottom-up approach,” he says. “We can show theorists all the weird things that are happening and try to understand what theories could describe them.”

According to Fermilab fellow Jennifer Ngadiuba, it’s a paradigm shift. “It’s the most interesting problem we can tackle,” she says. “Now is the time to do it.”

At the International Conference on High Energy Physics (ICHEP) in July, the team presented the first dataset collected using their anomaly-detection tool. “We are now taking data after several years of development by many different people,” Ngadiuba said during the presentation. “It’s actually triggering on unique events that would be lost otherwise.”

The next step is to analyze the data and see if any unexpected patterns emerge. Ngadiuba, Gandrakota and their colleagues hope that this new data-sorting mechanism will expand the reach of the CMS experiment beyond the limits of the human imagination and give theorists the ability to think about physics in a new way.

Anomaly detection

The vast majority of collisions in the LHC—more than 99.99%—produce particles and physical phenomena that were more interesting 50 years ago but today are well understood. What physicists are really interested in is rarity—the one-in-a-billion event that shows something new or unexpected. Luckily, one-in-a-billion is a regular occurrence at the LHC; the CMS experiment views about a billion proton-proton collisions every second.
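The arithmetic behind that last sentence is easy to check. A quick sketch using only the round numbers quoted above (about a billion collisions per second, a one-in-a-billion process, and a well-understood share of more than 99.99%):

```python
# Back-of-the-envelope rates, using only the round numbers quoted in the article.
COLLISIONS_PER_SECOND = 1_000_000_000   # "about a billion proton-proton collisions every second"
ONE_IN = 1_000_000_000                  # a "one-in-a-billion" process
SECONDS_PER_DAY = 60 * 60 * 24

rare_per_second = COLLISIONS_PER_SECOND / ONE_IN
rare_per_day = rare_per_second * SECONDS_PER_DAY

# "More than 99.99%" well understood leaves fewer than 0.01% (1 in 10,000) of collisions.
other_per_second_max = COLLISIONS_PER_SECOND // 10_000

print(f"rare events per second: {rare_per_second:.0f}")
print(f"rare events per day of continuous running: {rare_per_day:,.0f}")
print(f"collisions per second outside the well-understood bulk: < {other_per_second_max:,}")
```

So a one-in-a-billion process turns up roughly once a second while collisions are happening, but only if the trigger decides to keep that particular event.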

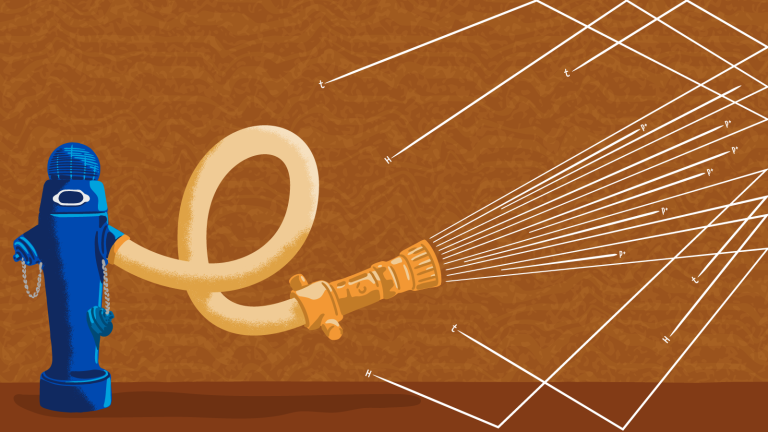

To sort the exceptional from the mundane, scientists install trigger systems in their detectors. Triggers are a series of data filters that gradually tease out the events that look interesting from those that look average. Because computing resources are limited, events that don’t make the cut are immediately thrown away.

“It’s like a pipe; only a certain amount of water can flow through it,” Gandrakota says. “If there’s too much water, the pipe will burst.”
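Because the pipe has a fixed capacity, a trigger cut is in practice set partly by how many events per second can be kept. The sketch below picks a score threshold that keeps at most a target fraction of events. The 40-million-per-second input rate is the readout rate quoted in this article; the 100,000-per-second output budget is an illustrative assumption, and `threshold_for_budget` is a hypothetical helper, not CMS software.

```python
import random
from fractions import Fraction

INPUT_RATE_HZ = 40_000_000    # readout rate quoted in the article
OUTPUT_BUDGET_HZ = 100_000    # assumed capacity of the "pipe", for illustration only

def threshold_for_budget(scores, keep_fraction):
    """Return a cut such that at most keep_fraction of events have score > cut."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    ranked = sorted(scores, reverse=True)
    n_keep = int(len(ranked) * keep_fraction)   # exact, since keep_fraction is a Fraction
    if n_keep >= len(ranked):
        return float("-inf")                    # the budget lets everything through
    # Only the n_keep highest scores can lie strictly above ranked[n_keep].
    return ranked[n_keep]

random.seed(0)
scores = [random.random() for _ in range(100_000)]          # stand-in "interestingness" scores
keep_fraction = Fraction(OUTPUT_BUDGET_HZ, INPUT_RATE_HZ)    # 1/400, i.e. 0.25%
cut = threshold_for_budget(scores, keep_fraction)
kept = sum(s > cut for s in scores)
print(f"keep fraction {float(keep_fraction)}, cut {cut:.4f}, kept {kept} of {len(scores)}")
```

Ties at the cut can only make fewer events pass, never more, so the budget is respected; everything below the cut is discarded for good.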

Saving only the best specimens keeps the data flowing, but it also causes physicists anxiety. “If we don’t record it, it’s gone forever,” says Javier Duarte, a professor at the University of California, San Diego.

Currently, physicists have a list of around 100 features that qualify an event as interesting enough to save. For instance, because the most exciting types of physics often involve particles with a huge mass, the triggers are programmed to look for their decay products: an eruption of massive and high-energy particles all coming from a single origin. (Events with just a few sparkles in the calorimeter are chucked.)
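A traditional trigger of this kind boils down to fixed cuts on energy. The toy version below is only a sketch: the `Event` type and the 100 GeV and 300 GeV thresholds are hypothetical, chosen to show the logic rather than to reproduce the real CMS trigger menu.

```python
from dataclasses import dataclass

@dataclass
class Event:
    energies_gev: list[float]   # hypothetical: energies of the objects seen in one collision, in GeV

# Hypothetical thresholds, for illustration only.
SUM_THRESHOLD_GEV = 300.0
SINGLE_THRESHOLD_GEV = 100.0

def cut_based_trigger(event: Event) -> bool:
    """Keep the event if its total energy or its most energetic object is large enough."""
    total = sum(event.energies_gev)
    leading = max(event.energies_gev, default=0.0)
    return total > SUM_THRESHOLD_GEV or leading > SINGLE_THRESHOLD_GEV

heavy_decay = Event([180.0, 150.0, 90.0, 40.0])   # a burst of high-energy products
few_sparkles = Event([12.0, 8.0, 5.0])            # a few small deposits
light_pair = Event([30.0, 25.0])                  # moderate deposits, e.g. from something light

for name, ev in [("heavy_decay", heavy_decay), ("few_sparkles", few_sparkles), ("light_pair", light_pair)]:
    print(name, "kept" if cut_based_trigger(ev) else "discarded")
```

The third event is discarded by exactly the same cuts that throw away the “sparkles,” which is the blind spot for low-mass particles that the next paragraphs describe.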

However, physicists know that new particles won’t necessarily all be goliaths like the Higgs boson and top quark; new particles could be slipping through the cracks simply because they are too light to deposit enough energy to pass the triggers. “We cannot see things that are below a certain energy because we do not trigger on those events,” Ngadiuba says. “We’re starting to think about new physics models that have particles with a very low energy.”

According to Ngadiuba, the problem with triggering on low-energy events is that nobody knows what defining characteristics could separate potentially interesting signatures from the millions of “uninteresting” collisions that also produce low-energy particles.

That’s why anomaly detection flips the script.

“We normally start by looking for a theory: seeing if one kind of particle exists,” Gandrakota says. “We were always siloed looking for a specific thing. But what if we do the opposite: What if we search for background and trigger on anything that looks weird?”

It’s in the air

Ngadiuba started her career in physics by building and testing particle detectors for CMS but made the switch to machine learning during a postdoc at CERN in 2017. “Things are changing in society,” Ngadiuba says. “Facial recognition, chatbots, self-driving cars; it’s in the air.”

Like a self-driving car, an anomaly-detection trigger must cruise along unencumbered when everything is as expected and then quickly react when something strange happens. But whereas a self-driving car must react within seconds, an anomaly-detection trigger must react within nanoseconds. “In many ways, it’s even more challenging than a self-driving car,” Ngadiuba says.

In Ngadiuba’s original feasibility study, CMS scientists had been able to get close to the necessary speed, but not close enough. They needed people power and support from the experiment to push beyond this threshold.

Gandrakota arrived at Fermilab in 2021 as a postdoc. He had dabbled in anomaly detection before, but his hopes were tempered by its seeming lack of feasibility. “It was on my radar, but I was skeptical,” he says. “People have been discussing anomaly detection since 2018, but it’s always been with data that was already recorded and never at the triggering level. I didn’t think it was possible to implement anomaly detection into the trigger before the end of LHC Run 3.”

Then he saw one of the papers Ngadiuba contributed to on that very topic. “Not only was it possible,” he says, “but they demonstrated that we can do this for the current data-taking period at CMS.

“It was inspiring; we can make an impact right now rather than a few years later.”

Gandrakota reached out to Ngadiuba to see how he could get involved.

Building a team

Ngadiuba and Gandrakota started by presenting the idea during CMS meetings. They caught the attention of several professors, who joined the project and offered help from their postdocs and graduate students.

“As we convinced more and more people, it became easier,” Gandrakota says. “Many people took on an active role in this project.”

One of those new team members was University of Colorado graduate student Noah Zipper. “It’s rare that we come up with an idea this new,” Zipper says. “I jumped at the chance to work on it.”

After building the team, the next step was research and development. “We needed to explore all the possibilities of what can be done and how to make the algorithm better,” Gandrakota says.

They needed to teach their machine-learning algorithm how to identify “this looks normal” from “this looks weird.”

To do this, the scientists fed the algorithm real data and had it look for patterns. “As humans, if we see a car on the road, we immediately know that it’s a car,” Gandrakota says. “We learn what a car looks like when we’re little kids; we don’t need to know the brand or the model to give it that label.”

The algorithm was exposed to 10 million collisions that were randomly recorded by CMS. Instead of telling the algorithm what looks weird, the scientists let the algorithm figure it out for itself.

“If we’ve seen thousands of cars on the road, we expect to see more cars. But if we suddenly see someone riding a horse, that would register as weird,” Gandrakota says. “Our trigger works the same way.”
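The article does not say what kind of model the CMS team trained, so the following only illustrates the general idea: learn a compact description of ordinary events from unlabeled data, then score each new event by how poorly that description fits it. This sketch swaps in principal component analysis, a simple linear method, and uses made-up eight-feature “events”; `fit_normal_model` and `anomaly_score` are hypothetical names.

```python
import numpy as np

def fit_normal_model(background, n_components):
    """Learn a compact description of 'normal' events from unlabeled examples."""
    mean = background.mean(axis=0)
    # Principal directions of the centred background data, from the SVD.
    _, _, vt = np.linalg.svd(background - mean, full_matrices=False)
    return mean, vt[:n_components]

def anomaly_score(events, mean, components):
    """Squared reconstruction error: how badly the 'normal' model explains each event."""
    centred = events - mean
    reconstructed = centred @ components.T @ components   # project onto the learned directions
    return np.sum((centred - reconstructed) ** 2, axis=1)

rng = np.random.default_rng(0)

# Hypothetical "background": 8 correlated features driven by 2 hidden factors plus noise.
mixing = rng.normal(size=(2, 8))
background = rng.normal(size=(10_000, 2)) @ mixing + 0.05 * rng.normal(size=(10_000, 8))

mean, components = fit_normal_model(background, n_components=2)

# Place the cut so that only 0.1% of background events would pass.
cut = np.quantile(anomaly_score(background, mean, components), 0.999)

typical = rng.normal(size=(1, 2)) @ mixing   # follows the familiar pattern (the "car")
weird = 3.0 * rng.normal(size=(1, 8))        # breaks the pattern (the "horse")

for name, event in [("typical", typical), ("weird", weird)]:
    score = anomaly_score(event, mean, components)[0]
    print(f"{name}: score {score:.4f}, flagged {score > cut}")
```

The cut is derived from background alone, so no example of a “weird” event is needed in advance. A real trigger model would be nonlinear and far more tightly constrained in size and latency.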

To make the algorithm fast, they experimented with compression. The LHC produces around one petabyte of data every second, and every number the neural network carries through its calculations adds to the work the hardware must do, which slows everything down. The question was how to minimize that complexity without losing accuracy.

“A neural network [a type of machine learning] is a bunch of multiplications and additions,” Ngadiuba says. “If we round 3.001 to 3, it doesn’t matter because the result is still the same. But if we round 0.001 to 0, you start losing precision and this gets propagated throughout the neural network.”
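Ngadiuba’s rounding example can be reproduced with a simple fixed-point grid. In this sketch the 4 fractional bits (a step of 1/16) and the neuron’s inputs are hypothetical, chosen to make the effect visible; real quantization schemes choose bit widths layer by layer.

```python
def quantize(x, frac_bits):
    """Round x to the nearest multiple of 2**-frac_bits, as a fixed-point number would."""
    step = 2.0 ** -frac_bits
    return round(x / step) * step

FRAC_BITS = 4   # hypothetical precision: values snap to multiples of 1/16 = 0.0625

weights = [3.001, 0.001]
inputs = [1.0, 2000.0]   # hypothetical inputs; the tiny weight happens to meet a large input

for w in weights:
    q = quantize(w, FRAC_BITS)
    print(f"{w} -> {q}  (relative error {abs(w - q) / abs(w):.2%})")

# One neuron is a weighted sum: "a bunch of multiplications and additions."
exact = sum(w * x for w, x in zip(weights, inputs))
rounded = sum(quantize(w, FRAC_BITS) * x for w, x in zip(weights, inputs))
print(f"neuron output: exact {exact:.3f}, with rounded weights {rounded:.3f}")
```

Rounding 3.001 costs a few hundredths of a percent, while rounding 0.001 to zero removes that term entirely, and in a deeper network the resulting error feeds into every layer that follows.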

To work through this problem, Ngadiuba and her colleagues partnered with Google on a new tool called QKeras, which allowed them to minimize their computing footprint while maximizing their accuracy.

Next came marrying the algorithm with the hardware. Most personal computers use CPUs, or central processing units, which are very powerful but cannot operate on a nanosecond timescale. Because the trigger has less than 50 nanoseconds to make a decision, the team had to program the machine-learning algorithms onto field-programmable gate arrays, or FPGAs.

“FPGAs operate like an assembly line; instead of one person working on the full problem, there are many people working in parallel on different parts of the problem,” Ngadiuba says.
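The assembly-line picture corresponds to what hardware designers call pipelining: each stage handles a different event at the same moment, so a new event can enter every clock tick even though each one still needs several ticks to finish. The toy model below is an illustration only; the four-stage split is arbitrary, and it ignores the within-stage parallelism (many multiplications at once) that FPGAs also exploit.

```python
def sequential_finish_times(n_events, n_stages):
    """One worker does every step of an event before starting the next one."""
    return [(i + 1) * n_stages for i in range(n_events)]

def simulate_pipeline(n_events, n_stages):
    """Assembly line: one worker per stage, all stages busy with different events at once."""
    stages = [None] * n_stages   # stages[k] holds the id of the event currently in stage k
    finish_tick = {}
    next_event = 0
    tick = 0
    while len(finish_tick) < n_events:
        tick += 1
        # Every event moves one stage down the line; a new event (if any) enters stage 0.
        incoming = next_event if next_event < n_events else None
        stages = [incoming] + stages[:-1]
        if incoming is not None:
            next_event += 1
        # Whatever sits in the last stage completes its final step during this tick.
        if stages[-1] is not None:
            finish_tick[stages[-1]] = tick
    return [finish_tick[i] for i in range(n_events)]

print("sequential:", sequential_finish_times(5, 4))
print("pipelined: ", simulate_pipeline(5, 4))
```

In both cases each event takes four ticks from start to finish, but the pipeline completes one event per tick once it is full, instead of one every four ticks.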

But FPGAs can be very difficult to program. “A few years ago, we would have needed really experienced electrical engineers to do this,” Zipper says.

Luckily, Ngadiuba, Duarte and their colleagues had recently developed a tool that allows physicists to program FPGAs directly without needing external expertise. “We can use standard machine-learning 101 code and put it on a chip that reads data 40 million times a second and makes decisions within 50 nanoseconds,” Zipper says. “It’s remarkable that they developed something like that.”
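Those two figures together show why an assembly-line design is unavoidable: a new event arrives before the previous decision is finished. A quick check, in which the 200 MHz FPGA clock frequency is an assumption for illustration only:

```python
READOUT_RATE_HZ = 40_000_000   # "reads data 40 million times a second"
DECISION_LATENCY_NS = 50       # "makes decisions within 50 nanoseconds"
CLOCK_MHZ = 200                # hypothetical FPGA clock, for illustration only

ns_between_events = 1e9 / READOUT_RATE_HZ                       # time between successive events
events_in_flight = DECISION_LATENCY_NS / ns_between_events      # events being processed at once
ns_per_clock = 1_000 / CLOCK_MHZ                                # length of one clock tick
clock_ticks_available = int(DECISION_LATENCY_NS // ns_per_clock)

print(f"a new event every {ns_between_events:.0f} ns")
print(f"up to {events_in_flight:.0f} events in the chip at the same time")
print(f"{clock_ticks_available} clock ticks per decision at {CLOCK_MHZ} MHz")
```

Under these assumptions the whole neural network has to fit into about ten clock ticks, with a fresh event entering the pipeline every five.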

Finally, it was time to gauge its performance. “Before we use it to collect data, we need to make sure it doesn’t break the data pipeline,” Gandrakota says. “We had to test it to make sure it was sustainable.”

The team installed their tool on a special server in the CMS cavern where it could watch and evaluate the LHC collisions without actually recording any data. This was an important proof-of-principle step since no experiment had ever attempted something similar.

“If something goes wrong, it’s a big deal,” Zipper says. “The window for data-taking is relatively short compared to the lifetime of these experiments, and so when collisions are happening, the trigger system has to be working.”

After a successful test drive, the team had a few months to finalize their tool before installing it directly into the CMS trigger system. By May, they were live.

According to Duarte, anomaly detection can be up to seven times more powerful than traditional methods at finding and isolating the signals of new particles. “This shows how powerful this can be when you’re not sure what signal you’re looking for,” Duarte said at ICHEP.

From the quadrillions of collisions generated by the LHC this spring and summer, the tool has already collected roughly 3 billion events. “When we looked at the data it collected, there were no weird spikes or dead time,” Zipper says. “This is what we wanted.”
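The article does not describe how the team checked for spikes or dead time. One simple, hypothetical approach is to count triggered events in fixed time intervals and flag intervals that sit far from the typical count. The counts below are invented for illustration, and `flag_rate_problems` is not a CMS tool; treating the square root of the median as the expected fluctuation assumes Poisson-like counting statistics.

```python
from statistics import median

def flag_rate_problems(counts, n_sigma=5.0):
    """Flag intervals whose trigger count is implausibly far from the typical count."""
    typical = median(counts)
    spread = max(typical, 1.0) ** 0.5   # Poisson-like fluctuation scale, sqrt(N)
    flags = []
    for i, c in enumerate(counts):
        if c == 0 and typical > 0:
            flags.append((i, "dead time?"))   # nothing recorded while collisions continued
        elif abs(c - typical) > n_sigma * spread:
            flags.append((i, "spike" if c > typical else "dip"))
    return flags

counts = [1000, 1012, 987, 1005, 0, 996, 1450, 1003]   # invented per-interval counts
print(flag_rate_problems(counts))
```

A clean run, in this sense, is one where the function returns an empty list.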

According to Ngadiuba, anomaly detection will change the conversation with the theory community.

“Theorists normally build models based on what the detector can see and what we’re already able to trigger on—not necessarily based on the physics motivation,” Ngadiuba says. “We’re now asking theorists what theoretical models our detector is not able to see because of the trigger. It’s a completely new way of thinking.”